Hadoop Architecture

To understand the Hadoop architecture, we first need to know the basic concepts of Hadoop.

What is Hadoop

The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It lets you first store Big Data in a distributed environment so that you can then process it in parallel. Hadoop has three basic components, and once we understand them, the Hadoop architecture becomes easy to follow:

- HDFS (Hadoop Distributed File System): stores data of various formats across a cluster. Files are broken into blocks, and the blocks are stored on nodes across the distributed architecture.

- YARN (Yet Another Resource Negotiator): handles resource management in Hadoop.

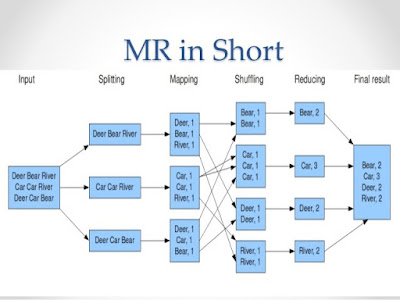

- MapReduce: a software framework for parallel processing.
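To make the idea of "store in blocks, then process in parallel" concrete, here is a minimal word-count sketch in plain Python. It does not use Hadoop itself; the function names (`split_into_blocks`, `map_block`, `shuffle`, `reduce_counts`) and the tiny block size are assumptions made purely for illustration.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def split_into_blocks(lines, block_size):
    """Group input lines into fixed-size blocks, loosely like HDFS blocks."""
    return [lines[i:i + block_size] for i in range(0, len(lines), block_size)]


def map_block(block):
    """Map phase: emit a (word, 1) pair for every word in one block."""
    return [(word.lower(), 1) for line in block for word in line.split()]


def shuffle(mapped_blocks):
    """Shuffle phase: group all emitted values by their key."""
    grouped = defaultdict(list)
    for pairs in mapped_blocks:
        for word, count in pairs:
            grouped[word].append(count)
    return grouped


def reduce_counts(grouped):
    """Reduce phase: sum the values collected for each key."""
    return {word: sum(counts) for word, counts in grouped.items()}


def word_count(lines, block_size=2):
    blocks = split_into_blocks(lines, block_size)
    # Each block is mapped independently, so the map work can run in parallel.
    with ThreadPoolExecutor() as pool:
        mapped = list(pool.map(map_block, blocks))
    return reduce_counts(shuffle(mapped))


if __name__ == "__main__":
    sample = ["big data big cluster", "data node", "name node"]
    print(word_count(sample))
```

In a real cluster the blocks live on different machines and the map step runs where the data is stored; the thread pool here only stands in for that parallelism.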

Hadoop Architecture

The Hadoop architecture packages together a file system, HDFS (Hadoop Distributed File System), and a MapReduce engine. The MapReduce engine can be either MapReduce/MR1 or YARN/MR2.

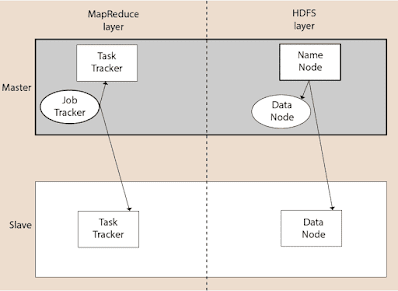

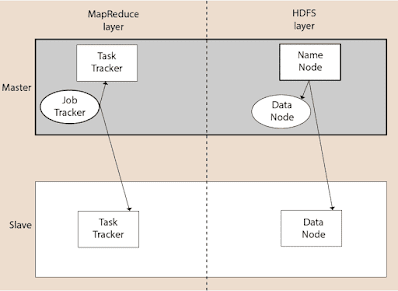

A Hadoop cluster consists of a single master node and multiple slave nodes. The master node includes the JobTracker, TaskTracker, NameNode, and DataNode, whereas each slave node includes a DataNode and a TaskTracker.
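As a rough mental model of the storage side of this master/slave layout, the sketch below keeps file metadata on a `NameNode` object and block contents on `DataNode` objects. It is a toy, not the HDFS API: the class and function names, the 4-byte block size, and the round-robin replica placement are all assumptions chosen for illustration.

```python
class DataNode:
    """Slave: stores the actual block contents."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}  # block_id -> bytes

    def write_block(self, block_id, data):
        self.blocks[block_id] = data

    def read_block(self, block_id):
        return self.blocks[block_id]


class NameNode:
    """Master: keeps only metadata (which blocks form a file and where they live)."""

    def __init__(self, datanode_names, replication=2):
        self.datanode_names = list(datanode_names)
        # Never ask for more replicas than there are DataNodes.
        self.replication = min(replication, len(self.datanode_names))
        self.namespace = {}  # path -> list of (block_id, [datanode names])

    def allocate(self, path, num_blocks):
        plan = []
        for index in range(num_blocks):
            # Round-robin placement: an assumption, not HDFS's real policy.
            targets = [
                self.datanode_names[(index + r) % len(self.datanode_names)]
                for r in range(self.replication)
            ]
            plan.append((f"{path}#{index}", targets))
        self.namespace[path] = plan
        return plan

    def locate(self, path):
        return self.namespace[path]


def put_file(namenode, datanodes, path, data, block_size=4):
    """Client side: ask the NameNode where to write, then write to DataNodes."""
    num_blocks = (len(data) + block_size - 1) // block_size
    plan = namenode.allocate(path, num_blocks)
    for index, (block_id, locations) in enumerate(plan):
        chunk = data[index * block_size:(index + 1) * block_size]
        for name in locations:
            datanodes[name].write_block(block_id, chunk)


def get_file(namenode, datanodes, path):
    """Client side: look up block locations, then read each block from a replica."""
    return b"".join(
        datanodes[locations[0]].read_block(block_id)
        for block_id, locations in namenode.locate(path)
    )


if __name__ == "__main__":
    nodes = {name: DataNode(name) for name in ("dn1", "dn2", "dn3")}
    master = NameNode(nodes, replication=2)
    put_file(master, nodes, "/logs/a.txt", b"hello hadoop")
    print(master.locate("/logs/a.txt"))
    print(get_file(master, nodes, "/logs/a.txt"))
```

The point of the split is that the master never holds file data, only the map from paths to blocks to nodes, which is why losing it is so costly for the cluster.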

- Hadoop Distributed File System: The Hadoop Distributed File System (HDFS) is the distributed file system for Hadoop. It has a master/slave architecture in which a single NameNode acts as the master and multiple DataNodes act as slaves. Both the NameNode and the DataNodes can run on commodity machines. HDFS is written in Java, so any machine that supports Java can run the NameNode and DataNode software.

- NameNode: The single master server in the HDFS cluster. Because there is only one, it can become a single point of failure. It manages the file system namespace by executing operations such as opening, renaming, and closing files. Having a single NameNode simplifies the architecture of the system.

- DataNode: The HDFS cluster contains multiple DataNodes. Each DataNode holds multiple data blocks, which store the actual data. A DataNode is responsible for serving read and write requests from the file system's clients. It also performs block creation, deletion, and replication on instruction from the NameNode.

- JobTracker: The JobTracker accepts MapReduce jobs from clients and consults the NameNode in order to process the data. In response, the NameNode provides metadata to the JobTracker.

- TaskTracker: It works as a slave node for the JobTracker. It receives a task and its code from the JobTracker and applies that code to the file. This process can also be called a Mapper.

- MapReduce layer: The MapReduce layer comes into play when the client application submits a MapReduce job to the JobTracker. In response, the JobTracker sends the request to the appropriate TaskTrackers. Sometimes a TaskTracker fails or times out; in that case, that part of the job is rescheduled. In the YARN/MR2 engine, resource management and job scheduling are handled by YARN instead.
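The rescheduling behaviour described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how the JobTracker is implemented: `run_job`, `flaky_run`, the tracker names, and the retry limit are all hypothetical.

```python
def run_job(tasks, trackers, run_task, max_attempts=3):
    """Assign each task to a tracker; if it fails, retry on the next tracker."""
    results = {}
    for i, task in enumerate(tasks):
        for attempt in range(max_attempts):
            tracker = trackers[(i + attempt) % len(trackers)]
            try:
                results[task] = run_task(tracker, task)
                break
            except RuntimeError:
                # The tracker failed or timed out: reschedule the task elsewhere.
                continue
        else:
            raise RuntimeError(f"task {task!r} failed after {max_attempts} attempts")
    return results


def flaky_run(tracker, task):
    """Hypothetical task runner in which the tracker 'tt1' always fails."""
    if tracker == "tt1":
        raise RuntimeError("tracker unavailable")
    return f"{task} done on {tracker}"


if __name__ == "__main__":
    print(run_job(["split-0", "split-1"], ["tt1", "tt2"], flaky_run))
```

Here `split-0` is first sent to the failing tracker and then rescheduled on `tt2`, so the job still completes as long as at least one tracker is healthy.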
