Hadoop:-

To understand Hadoop, we first need to know what big data is, so this post starts with big data.

What is Big Data

- Big data is a collection of data that is huge in volume.

- Big data is still data, only at a very large size.

- Big data is a collection of large and complex data sets that cannot be processed using traditional computing techniques.

Sources of Big Data

- Social networking sites: Sites such as Facebook, Google, and LinkedIn generate huge amounts of data every day, as they have billions of users worldwide.

- E-commerce sites: Sites like Amazon, Flipkart, and Alibaba generate huge volumes of logs from which users' buying trends can be traced.

- Weather stations: Weather stations and satellites produce very large amounts of data, which are stored and processed to forecast the weather.

- Telecom companies: Telecom giants like Airtel and Vodafone study user trends and publish their plans accordingly; to do this, they store the data of their millions of users.

- Share market: Stock exchanges across the world generate huge amounts of data through their daily transactions.

Types of Big Data

- Structured:- Data that can be stored and processed in a fixed format is called structured data, for example a table with fixed attributes.

- Unstructured:- Data that has no known form, cannot be stored in an RDBMS, and cannot be analyzed unless it is transformed into a structured format is called unstructured data. This type of data is usually generated by humans. Examples: natural language, voice, Twitter posts, Wikipedia articles, etc.

- Semi-structured:- Semi-structured data does not follow the formal structure of a data model (i.e. a table definition in a relational DBMS), but it still has some organizational properties, such as tags and other markers that separate semantic elements and make it easier to analyze. Examples: HTML, XML, and JSON files.
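The three types above can be illustrated with a small sketch. The sample records (names, cities, tags, review text) are made up for illustration and are not from any real data set; only Python's standard library is used.

```python
import csv
import io
import json

# Structured: fixed columns, every row has the same attributes (like a table).
structured_csv = "user_id,name,city\n1,Asha,Delhi\n2,Ravi,Mumbai\n"
rows = list(csv.DictReader(io.StringIO(structured_csv)))

# Semi-structured: no table definition, but keys mark the semantic elements.
semi_structured = '{"user_id": 1, "name": "Asha", "tags": ["prime", "mobile"]}'
record = json.loads(semi_structured)

# Unstructured: free text; it has to be transformed before it can be analyzed.
unstructured = "Loved the new phone, delivery was quick!"
tokens = unstructured.lower().replace(",", "").replace("!", "").split()

print(rows[0]["city"])   # a column can be addressed directly by name
print(record["tags"])    # fields are found through their keys
print(tokens)            # structure only appears after tokenizing
```

Note how the structured and semi-structured records can be queried by field name straight away, while the free text needs a transformation step (here, a very crude tokenizer) before anything can be computed on it.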

Hadoop:-

The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models.

Working Procedure

Hadoop is a framework that lets you first store big data in a distributed environment so that you can then process it in parallel. Hadoop has three basic components:

- HDFS: The Hadoop Distributed File System stores data of various formats across a cluster. Files are broken into blocks, and the blocks are stored on nodes across the distributed architecture.

- YARN: Yet Another Resource Negotiator handles job scheduling and cluster resource management in Hadoop.

- MapReduce: A parallel processing software framework that helps Java programs perform parallel computation on data using key-value pairs. The Map task takes input data and converts it into a data set of key-value pairs. The output of the Map task is consumed by the Reduce task, and the output of the reducer gives the desired result.
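To make the HDFS point about breaking files into blocks more concrete, here is a minimal sketch in plain Python. It is not the HDFS API: the function names, the node names, the 128 MB block size, and the round-robin placement are all assumptions chosen for illustration, and real HDFS placement is more involved.

```python
BLOCK_SIZE_MB = 128  # assumed block size for this sketch


def split_into_blocks(file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    """Return the sizes (in MB) of the blocks a file would be split into."""
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    sizes = [block_size_mb] * full_blocks
    if remainder:
        sizes.append(remainder)  # the last block may be smaller than the rest
    return sizes


def place_blocks(block_sizes, nodes):
    """Assign blocks to nodes round-robin (a simplification, hypothetical)."""
    placement = {node: [] for node in nodes}
    for index, size in enumerate(block_sizes):
        node = nodes[index % len(nodes)]
        placement[node].append((index, size))
    return placement


blocks = split_into_blocks(300)
layout = place_blocks(blocks, ["node-1", "node-2"])
print(blocks)
print(layout)
```

With a 300 MB file, the sketch produces two full 128 MB blocks and one 44 MB block, spread over the two hypothetical nodes. Because each block lives on some node, a Map task can later be run close to the block it processes.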

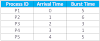

Example:-

Phases of MapReduce data flow

- Input reader:- The input reader reads the incoming data and splits it into data blocks of the appropriate size (64 MB to 128 MB). Each data block is associated with a Map function. Once the input reader has read the data, it generates the corresponding key-value pairs. The input files reside in HDFS.

- Map function:- The Map function processes the incoming key-value pairs and generates the corresponding output key-value pairs. The Map input and output types may differ from each other.

- Partition function:- The partition function assigns the output of each Map function to the appropriate reducer. It is given the key and the value, and it returns the index of the reducer that should receive them.

- Shuffling and sorting:- The data is shuffled between and within nodes so that it moves out of the Map phase and is ready for the Reduce function. Shuffling can sometimes take considerable time. The input to the Reduce function is then sorted: keys are compared using a comparison function and arranged in sorted order.

- Reduce function:- The Reduce function is invoked for each unique key. The keys are already arranged in sorted order. The Reduce function iterates over the values associated with each key and generates the corresponding output.

- Output writer:- Once the data has flowed through all of the phases above, the output writer runs. Its role is to write the Reduce output to stable storage.
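The phases above can be sketched as a single-process word count in plain Python. This is only a simulation of the data flow, not Hadoop's Java API: every function name is hypothetical, the input is a short in-memory string instead of files in HDFS, and the partitioner uses a simple character sum (Python's built-in `hash` for strings varies between runs, so it is avoided here).

```python
from collections import defaultdict


def input_reader(text):
    """Yield (line_number, line) pairs, standing in for splits read from HDFS."""
    for line_number, line in enumerate(text.splitlines()):
        yield line_number, line


def map_function(_key, line):
    """Emit (word, 1) for every word; output types differ from the input types."""
    for word in line.lower().split():
        yield word, 1


def partition_function(key, num_reducers):
    """Return the index of the reducer that should receive this key."""
    return sum(ord(ch) for ch in key) % num_reducers


def shuffle_and_sort(mapped_pairs, num_reducers):
    """Group values by key per reducer, then sort each reducer's keys."""
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for key, value in mapped_pairs:
        partitions[partition_function(key, num_reducers)][key].append(value)
    return [sorted(partition.items()) for partition in partitions]


def reduce_function(key, values):
    """Called once per unique key; iterates over that key's values."""
    return key, sum(values)


def run_job(text, num_reducers=2):
    mapped = [pair
              for key, line in input_reader(text)
              for pair in map_function(key, line)]
    # One output list per reducer stands in for the output writer.
    return [[reduce_function(key, values) for key, values in partition]
            for partition in shuffle_and_sort(mapped, num_reducers)]


result = run_job("deer bear river\ncar car river\ndeer car bear")
for reducer_index, reducer_output in enumerate(result):
    print(reducer_index, reducer_output)
```

Each reducer sees only the keys routed to it by the partition function, and it sees them in sorted order. Merging the per-reducer outputs gives the word counts for the whole input.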
